Trial of Psilocybin versus Escitalopram for Depression: A Compilation of Analyses

Friday Journal Club #6

A few things before we begin:

Ayyy I’m back. Happy belated Bicycle Day, 4/20, and Earth Day. I hope you celebrated at least one, if not all, of these holidays.

Big stuff happening in the psychedelic world! Today we’re going to explore the hubbub surrounding the recent head-to-head psilocybin versus escitalopram study that was published in the New England Journal of Medicine last week.

I will be joining some kool kats on Clubhouse tonight to converse broadly about drugs & society. Come join! Link at the bottom of the newsletter.

Follow me on Twitter @TyQuig and Instagram @thetab_psychedelicscience

Reminder: Part of this newsletter is 24/7 office hours. If you have a question about psychedelic science, send it my way.

Let’s go:

Trial of Psilocybin versus Escitalopram for Depression: A Compilation of Analyses

April 7th-14th, 2021

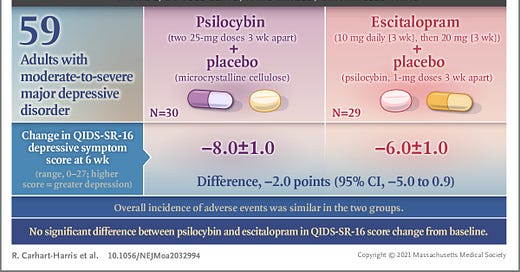

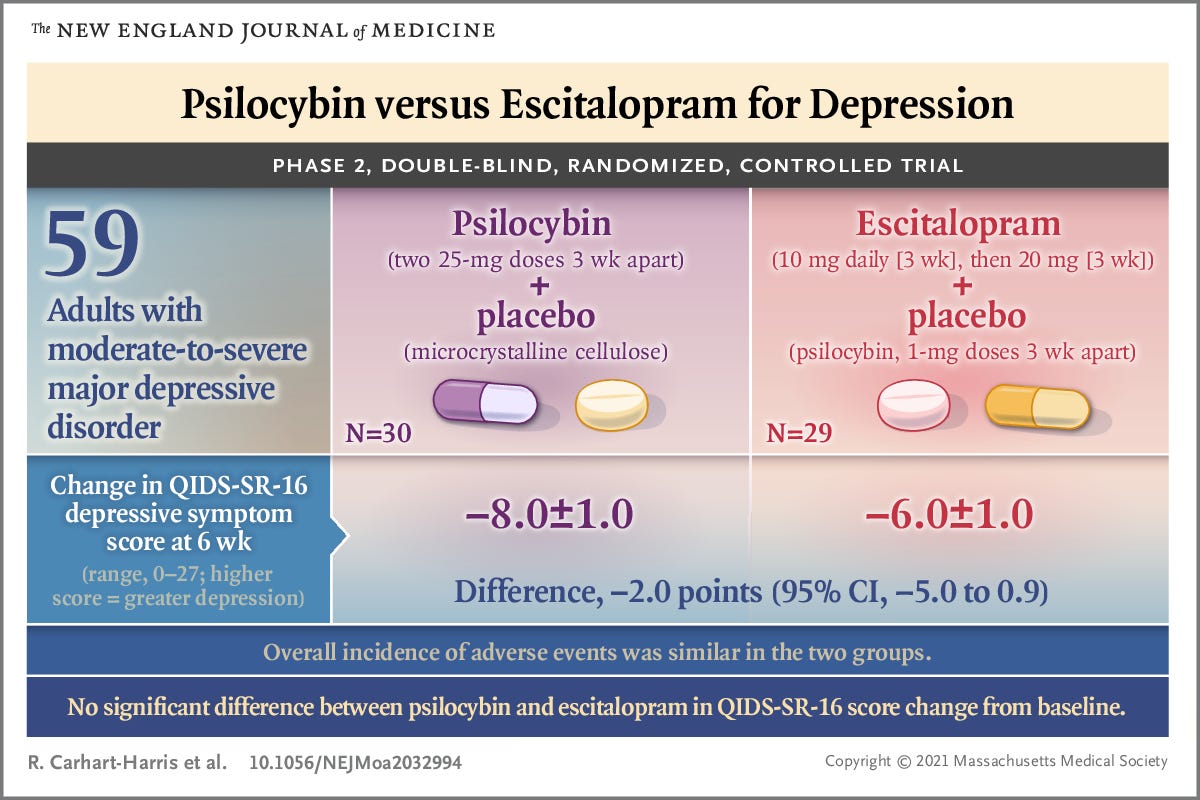

Prominent psychedelic researcher Dr. Robin Carhart-Harris begins an 8-day Twitter tease about a study soon to be published in the acclaimed New England Journal of Medicine. His tantalizing thread opens with details of the experimental design, e.g., the number of participants, the dosing regimen, the recruitment strategy, and the primary and secondary outcome measures.

This quickly evolves into a savory, fish-themed lesson on confidence intervals and on the importance of defining one’s hypotheses, predictions, and statistical analysis plan before a study begins. This is especially crucial, and required, for a Phase 2 trial like this one assessing the efficacy of psilocybin for the treatment of depression.

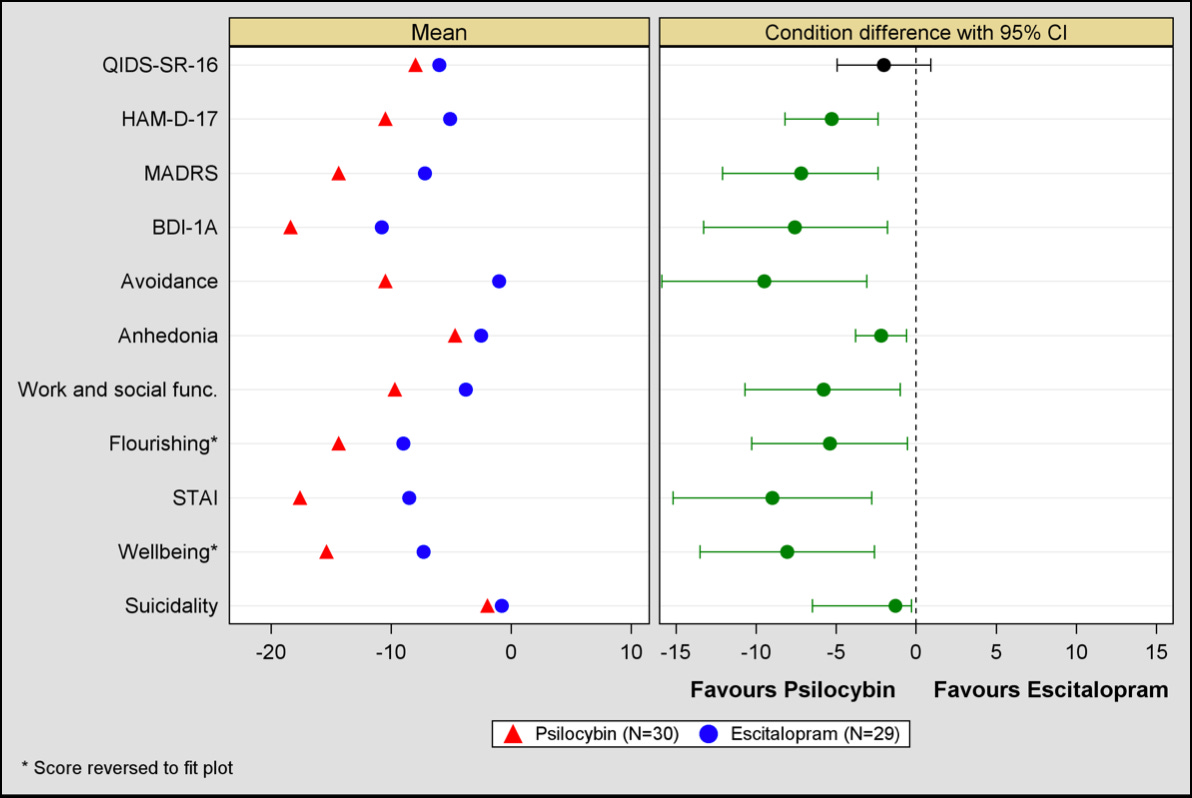

On Day 6, Dr. Carhart-Harris posts an “example” forest plot to show how confidence intervals are reported. Stripped of labels, the plot shows a mysterious set of results, every one of which appears significant except one.

Was it just a plot drawn up at random to demonstrate how confidence intervals are reported? No. This exact plot would later appear, with labels, in the supplementary data of the paper the thread was about. The single non-significant result on the plot would turn out to be the study’s primary outcome measure, which is the only result the pre-registered analysis allows the researchers to use to test their main hypothesis. Thus, the main finding of the study is that psilocybin was not shown to be superior to escitalopram in treating depression.
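For anyone who wants the forest-plot logic spelled out, here’s a minimal Python sketch of the standard reading rule: a between-group difference counts as statistically significant (at the 5% level) when its 95% confidence interval doesn’t cross zero, the “no difference” line. The rows and numbers below are made up for illustration and are not the trial’s results; the `Interval` class and `excludes_null` helper are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    """One row of a forest plot (hypothetical structure for this sketch)."""
    label: str
    estimate: float  # point estimate of the between-group difference
    lower: float     # lower bound of the 95% confidence interval
    upper: float     # upper bound of the 95% confidence interval


def excludes_null(row: Interval, null_value: float = 0.0) -> bool:
    """True if the whole interval sits on one side of the 'no difference' line."""
    return row.lower > null_value or row.upper < null_value


# Made-up rows for illustration; these are NOT the trial's results.
rows = [
    Interval("Primary outcome", 2.0, -1.0, 5.0),
    Interval("Secondary outcome A", 4.0, 1.5, 6.5),
    Interval("Secondary outcome B", 3.0, 0.5, 5.5),
]

for row in rows:
    verdict = "significant" if excludes_null(row) else "not significant (crosses 0)"
    print(f"{row.label}: {row.estimate:+.1f} [{row.lower:.1f}, {row.upper:.1f}] -> {verdict}")
```

Only the first made-up row crosses zero, which is the same visual pattern as the unlabeled plot: everything looks significant except one line, and if that line is the primary outcome, it’s the one that counts.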

The other results were all secondary measures, which cannot be used to test the main hypothesis, even though psilocybin seemed to perform better on every single one. While these data are just as tantalizing as Dr. Carhart-Harris’ extended-release Twitter thread, the study’s unfortunate design means they cannot technically be used to say anything definitive about psilocybin. More on this below.

The aforementioned thread:

The main figure from the supplementary data:

April 14th, 2021-present:

Following the study’s release, a tidal wave of thoughts, opinions, and analyses whipped through the feeds and inboxes of those of us tuned into psychedelic news. The best of these pieces are authored by communicators with bigger brains than I. Rather than say the same things as them but worse, I’ve summarized and linked the best analyses below so you can absorb the expert opinions for yourself.

First, check out this full breakdown of the study at Blossom:

Now, understand that media reports claiming psilocybin is “as good as” escitalopram are fundamentally wrong. The analysis used in this study tests specifically whether psilocybin is superior to escitalopram. Since the difference they observed between psilocybin and escitalopram on the primary measure was not significant, the only conclusion that can be drawn is that psilocybin was not shown to be superior to escitalopram. A non-significant superiority test is not evidence that two treatments are equivalent; showing that one drug is “as good as” another requires an equivalence (or non-inferiority) design with a pre-specified margin. That is inherently different from the message media outlets are reporting.
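To see why “not superior” isn’t the same as “as good as,” here’s a rough Python sketch of the two decision rules applied to a confidence interval for the difference between treatments. Assumptions, all mine: positive differences favour psilocybin, the equivalence margin is an arbitrary made-up value, and the intervals are hypothetical, not the trial’s. In a standard two one-sided tests (TOST) equivalence analysis at the 5% level, the interval you check is the 90% CI.

```python
def superiority_verdict(lower: float, upper: float) -> str:
    """Superiority read off a two-sided CI for the difference.

    Sign convention (an assumption for this sketch): difference =
    psilocybin improvement minus escitalopram improvement, so > 0 favours psilocybin.
    """
    if lower > 0:
        return "psilocybin superior"
    if upper < 0:
        return "escitalopram superior"
    return "superiority not shown"


def equivalence_verdict(lower: float, upper: float, margin: float) -> str:
    """Equivalence: the whole CI must sit inside (-margin, +margin).

    For a TOST analysis at alpha = 0.05, pass the 90% CI here.
    """
    if -margin < lower and upper < margin:
        return "equivalent within margin"
    return "equivalence not shown"


# Hypothetical intervals and margin, for illustration only.
MARGIN = 3.0
wide_ci = (-1.0, 5.0)    # crosses zero AND spills past the margin
narrow_ci = (-0.5, 0.8)  # crosses zero but sits inside the margin

for name, (lo, hi) in [("wide", wide_ci), ("narrow", narrow_ci)]:
    print(name, superiority_verdict(lo, hi), "|", equivalence_verdict(lo, hi, MARGIN))
```

The wide interval fails both tests: it can’t show psilocybin is better, and it can’t show the two are equivalent either. Only a narrow interval sitting inside the margin would support an “as good as” headline.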

The next important point to understand is that, while psilocybin scored better than escitalopram on every other measure they used, these were secondary measures and have not been corrected for multiple comparisons. When researchers register a clinical trial, they must state which measurement should be considered the most important (the primary outcome). The primary measure for this study was the QIDS-SR-16 (the 16-item self-report Quick Inventory of Depressive Symptomatology), a questionnaire that drug regulators have accepted in the past as a valid measurement of depression. The other measurements they took are secondary and cannot be used to draw definitive conclusions. This rule is in place to prevent researchers from running a bunch of analyses in the hopes that one will support their hypothesis. Keeps ’em honest. Anyway, these researchers happened to pick the one measurement that ended up being non-significant, even though it is not necessarily a better measure of depression than the secondary scales they used.

You could try to draw your own conclusions about psilocybin from the secondary measures in the supplementary data. However, because so many secondary measures were analyzed, they should be corrected for multiple comparisons. This means tightening the threshold for significance to weed out false positives: every additional test is another chance for a fluke result to cross the line. Overlapping measures make this messier (e.g., scales for depression and anxiety may largely capture the same thing), so a string of “significant” secondary results is weaker evidence than it looks. The researchers did not pre-register a correction for multiple comparisons, so they didn’t perform one. What looks significant in the supplementary data might not be.
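Here’s a minimal Python sketch of what “correcting for multiple comparisons” does in practice, using two standard textbook methods (Bonferroni and Holm). The p-values are invented for illustration, not taken from the paper’s supplement, and which correction a given trial should use is its own design decision; this only shows how the bar moves.

```python
def familywise_error(m: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across m independent tests
    when every null hypothesis is true."""
    return 1 - (1 - alpha) ** m


def bonferroni(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Reject only if p <= alpha / m (simple, conservative)."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def holm(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Holm step-down: compare the k-th smallest p-value (k = 0, 1, ...)
    to alpha / (m - k) and stop at the first failure."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    rejected = [False] * m
    for k, i in enumerate(order):
        if p_values[i] > alpha / (m - k):
            break  # every larger p-value is retained too
        rejected[i] = True
    return rejected


# Hypothetical p-values for five secondary outcomes (not from the trial).
p = [0.001, 0.011, 0.015, 0.04, 0.045]
print("uncorrected:", [x <= 0.05 for x in p])
print("Bonferroni: ", bonferroni(p))
print("Holm:       ", holm(p))
print(f"family-wise error with 5 tests: {familywise_error(5):.3f}")
```

With five made-up p-values all under 0.05, an uncorrected reading calls all five significant, Bonferroni keeps one, and Holm keeps three. And if none of the five effects were real, five independent tests at 0.05 would still carry about a 23% chance of at least one false positive.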

This study has also raised questions about how well the tests psychiatry uses to assess depression work in general, and whether they are even less suited to capturing psilocybin’s effects. The primary measure in this study is a 16-item questionnaire, which seems... low. More commentary here:

In sum, this study is inconclusive AF, but promising and informative for a Phase 3 trial on psilocybin and depression.

Other generally weird things about this study:

The trail of teasers Carhart-Harris laid down on Twitter before the study was published was a bit strange, but I imagine he wanted to pre-allay some of the inevitable confusion surrounding the study design. Or maybe it was for that sweet sweet retweet dopamine high. Either way, proper and impactful research should be able to stand alone, especially in a field where people are paying close attention to every new morsel of information that is released.

On a related note, from Dr. Kevin McConway, Emeritus Professor of Applied Statistics, The Open University, on the Science Media Centre:

“The one aspect of his Twitter thread that I really don’t like is where he describes the analysis and presentation of the results in the NEJM paper as being particularly conservative, because they follow established conventions of giving primacy to the pre-registered primary outcome measure, and of taking great care in interpreting secondary outcome measures when there is a large number of them. Those conventions exist for good reasons, as I’ve explained (and as indeed he also explains). But when he writes, in relation to the comparisons of the secondary measures, that “Values are just values, free of any narrative”, he’s simply wrong, in my view. The values are numbers that emerged from particular choices, made by him and the other researchers on this trial, on how to design and register a clinical trial, and on further choices about how to analyse the data. Looking at the numbers in isolation as if the numbers can stand for themselves is seriously misleading.”

This study was registered in both America and the EU. The American registration lists fMRI data as the primary measure, while the EU registration lists the QIDS-SR-16 as the primary measure and fMRI and other tests as secondary measures. However, the NEJM article cites only the American registration, yet it reports no fMRI data. The fMRI data are apparently still being analyzed by the research group.

From Dr. Boris Heifets, Assistant Professor, Stanford School of Medicine, in Psilocybin Alpha:

“The statistical analysis plan and calculation of how many subjects to recruit reads exactly as one would expect for a study that expects a massive difference between the two treatments....If the authors really believed from the get-go that these treatments would be similar, they could have designed the trial to test for equivalence, and they would have analyzed it accordingly. What we are left with is very difficult to interpret, except to say that we need to do it again with many more subjects.”
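Heifets’ point about sample size can be made concrete with the usual normal-approximation formulas. Below is a rough Python sketch, assuming two equal-sized arms, a known and equal standard deviation, 5% alpha, and 80% power; the SD, expected difference, and margin are hypothetical values, not the trial’s actual power calculation. Real trial planning uses more careful methods (t-distributions, dropout, non-zero true differences).

```python
import math
from statistics import NormalDist

Z = NormalDist().inv_cdf  # standard normal quantile function


def n_superiority(sd: float, diff: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-arm n to detect a true difference `diff` with a two-sided test."""
    z_total = Z(1 - alpha / 2) + Z(power)
    return math.ceil(2 * (sd * z_total / diff) ** 2)


def n_equivalence(sd: float, margin: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-arm n for a TOST equivalence test, assuming the true difference is 0."""
    beta = 1 - power
    z_total = Z(1 - alpha) + Z(1 - beta / 2)
    return math.ceil(2 * (sd * z_total / margin) ** 2)


# Hypothetical planning values, for illustration only.
SD = 5.0
BIG_DIFFERENCE = 5.0  # a "massive" expected advantage of one SD
MARGIN = 2.5          # call the drugs equivalent if within half an SD

print("superiority, per arm:", n_superiority(SD, BIG_DIFFERENCE))
print("equivalence, per arm:", n_equivalence(SD, MARGIN))
```

Under these made-up numbers, a trial powered to catch a one-SD advantage needs 16 people per arm, while showing equivalence within half an SD needs 69 per arm. That’s the gist of the quote: a trial sized to detect a big difference is far too small to show that two treatments are similar.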

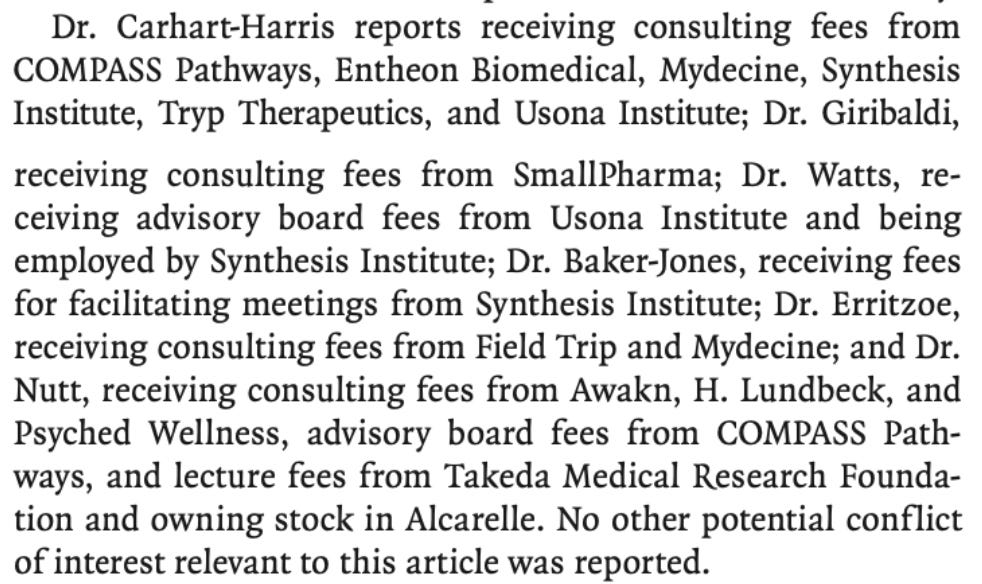

The conflicts of interest in this study are borderline egregious:

Must-read analyses of this study:

Expert reaction to phase 2 trial comparing psilocybin and escitalopram for depression

Expert Commentary on the Highly-Anticipated SSRI vs. Psilocybin Publication

First-of-its-kind study pits psilocybin against a common antidepressant

Inside the experiment that could bring psychedelic drugs to the NHS

Commentary directly from Dr. Carhart-Harris:

Psychedelics are transforming the way we understand depression and its treatment

Drug Science Podcast #34 Psilocybin vs Antidepressants with Dr. Robin Carhart-Harris

I’d appreciate your feedback on how I did breaking down this science. Let me know in the comments.

Think more people should know about psychedelic science? Share my newsletter with your people, because your people are my people ✌🏽.

📃 Here’s the paper:

Carhart-Harris, R. et al. Trial of Psilocybin versus Escitalopram for Depression. N. Engl. J. Med. 384, 1402–1411 (2021).

Main article available here.

Supplementary data available here.

Join us on Clubhouse tonight at 7:30 p.m. ET in conversation about drugs & society.
